Yoga pose perfection using Deep Learning: An Algorithm to Estimate the Error in Yogic Poses

Published version in the “Journal of Student Research”: https://www.jsr.org/hs/index.php/path/article/view/2140

Abstract

Despite considerable attention and effort in computer vision and artificial intelligence, human body pose detection remains a difficult task. Its applications range widely, from health monitoring to public security. This paper focuses on its application to yoga, an art that has been practiced for over a millennium. In modern society, yoga has become a common form of exercise, which creates a demand for instruction on how to do it properly. Performing certain yoga postures improperly may lead to injury and fatigue, so the presence of a trainer becomes important. Current research on pose estimation for yoga mainly addresses the classification of yogic poses. In this work, we propose a method, using the TensorFlow MoveNet Thunder model, that applies real-time pose estimation to detect the error in a person’s pose, thereby allowing them to correct it.

Introduction

Pose estimation and classification have been extensively researched and have made great advances in recent years. Current classification models detect which pose is being performed but do not account for how accurately it is performed. An artificial intelligence-based application might be useful to identify yoga poses and provide personalized feedback to help individuals improve their poses [5]. Deep learning approaches provide a more straightforward way of mapping the body structure than handling the dependencies between structures manually. We employ the TensorFlow MoveNet Thunder model for pose estimation in this work.

Methods

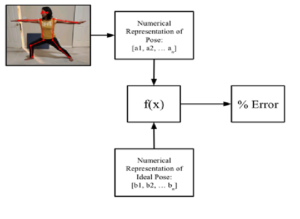

To determine the quantitative error in a person’s pose, the pose must first be defined numerically so that a numerical error can be calculated.

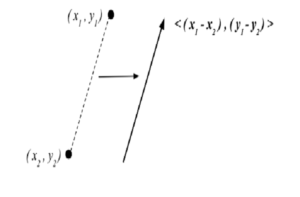

For a yoga pose, the most important information is the angle between certain body parts [18]. Pose estimation returns a person’s pose as key point coordinates, which are also used to draw lines between the key points on the device used for detection. These coordinates can be used to form vectors, and the angles between adjacent vectors can then be computed. Hence, we use an array of angles as a means of describing a pose. To find the error, a reference, or “correct pose”, is needed to compare against the user’s pose. Because poses can now be expressed numerically, two arrays can be used to define the current pose and the “perfect” pose. The overall error of the current pose relative to the selected “perfect” pose can then be calculated from these two arrays.
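The angle-array idea described above can be illustrated with a minimal sketch. It assumes 2D key point coordinates, and it uses the mean absolute angle difference as the error measure; that measure, along with the function names `joint_angle`, `pose_angles`, and `pose_error` and the example coordinates, is an assumption made for illustration and is not necessarily the formula used in the paper.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b, between the vectors b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0 or n2 == 0:
        raise ValueError("adjacent key points coincide; angle is undefined")
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_t = max(-1.0, min(1.0, cos_t))  # guard against rounding drift
    return math.degrees(math.acos(cos_t))

def pose_angles(keypoints, triplets):
    """Describe a pose as a list of joint angles.

    keypoints: dict mapping a key point name to (x, y)
    triplets:  list of (end, joint, end) names; the angle is taken at `joint`
    """
    return [joint_angle(keypoints[a], keypoints[b], keypoints[c])
            for a, b, c in triplets]

def pose_error(current, reference):
    """Mean absolute difference in degrees (an assumed error measure)."""
    if len(current) != len(reference):
        raise ValueError("angle arrays must have the same length")
    return sum(abs(c - r) for c, r in zip(current, reference)) / len(current)

# Hypothetical coordinates for one arm: shoulder, elbow, wrist.
reference_pose = {"shoulder": (0.0, 0.0), "elbow": (1.0, 0.0), "wrist": (2.0, 0.0)}
current_pose = {"shoulder": (0.0, 0.0), "elbow": (1.0, 0.0), "wrist": (1.0, 1.0)}
arm = [("shoulder", "elbow", "wrist")]

error = pose_error(pose_angles(current_pose, arm),
                   pose_angles(reference_pose, arm))
print(f"elbow angle error: {error:.1f} degrees")
```

Working with angles rather than raw coordinates makes the comparison independent of where the person stands in the frame and how large they appear, which is one reason an angle array is a convenient pose description.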

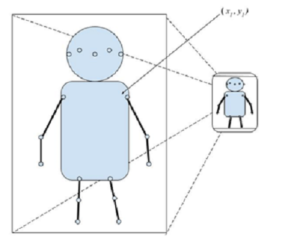

MoveNet is a pose estimation model that detects 17 key points of the human body in images or video sequences. These joint locations, or key points, are indexed by “Part ID”, and each comes with a confidence score in the range 0.0 to 1.0, with 1.0 being the greatest confidence. The MoveNet model’s performance varies depending on the device and output stride [14]. The model is invariant to the size of the image, so it can predict the pose position accurately even when the image is scaled. In this work, we (i) train the pose estimation algorithm using datasets of asanas to find the “perfect pose”, (ii) propose an algorithmic method to calculate the error between the perfect pose and the current pose, and (iii) show the results of the model in real-time pose correction. The paper ends with conclusions.
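As a rough illustration of how the 17 key points and their confidence scores might be consumed, the sketch below parses an array laid out like MoveNet’s single-pose output, which has shape `[1, 1, 17, 3]` with each row holding normalized `(y, x, score)`. The model itself is not called here; the input is a hand-built stand-in, and the helper name `parse_keypoints` and the 0.3 confidence threshold are assumptions chosen for illustration.

```python
# Key point names in MoveNet's output order (Part ID 0 to 16).
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

def parse_keypoints(output, min_score=0.3):
    """Map key point names to (x, y, score), dropping low-confidence points.

    output:    nested sequence shaped [1, 1, 17, 3], rows are (y, x, score)
    min_score: assumed cut-off; points scoring below it are omitted
    """
    rows = output[0][0]
    if len(rows) != len(KEYPOINT_NAMES):
        raise ValueError("expected 17 key points")
    parsed = {}
    for name, (y, x, score) in zip(KEYPOINT_NAMES, rows):
        if score >= min_score:
            parsed[name] = (x, y, score)  # reorder to (x, y) for geometry
    return parsed

# Stand-in for a model output: every point confident except the ankles.
fake_rows = [[0.5, 0.5, 0.9] for _ in KEYPOINT_NAMES]
fake_rows[15][2] = 0.1  # left_ankle
fake_rows[16][2] = 0.2  # right_ankle
fake_output = [[fake_rows]]

visible = parse_keypoints(fake_output)
print(sorted(set(KEYPOINT_NAMES) - set(visible)))
```

Filtering on the confidence score before computing angles avoids measuring error against joints the model could not locate reliably, for example limbs that are occluded in a given asana.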

The detailed paper is available at the link below: https://drive.google.com/file/d/1RHI9RmRNWq-dXwGS33xdSlGQzVod6EdO/view?usp=sharing